10 Brain-Breaking Scientific Concepts

Many of us here at Listverse really enjoy messing with the heads of our readership. We know that you come here to be entertained and informed; perhaps it’s just our sterling work ethic, but on some days we feel the need to give you much, much more than you bargained for. This is one of those days.

So while you depend on us for a pleasant distraction during whatever part of the day you’re visiting, please allow us instead to give you a MAJOR distraction from the REST of the day, and perhaps tomorrow as well. Here are ten things that are going to take a while to get your brain around; we still haven’t managed it, and we write about this stuff.

Have you ever been able to tell what another person is thinking? How do you know? It’s one thing to suggest that we’re not really capable of knowing anybody else’s thoughts; it’s quite another to suggest that that person may not have any thoughts for you to know.

The philosophical zombie is a thought experiment used in philosophy to explore problems of consciousness as it relates to the physical world. A philosophical zombie would be physically and behaviorally identical to a normal human being, yet have no conscious experience at all. Most philosophers agree that such zombies don’t actually exist, but here’s the key concept: imagine that all of those other people you encounter in the world are like the non-player characters in a video game. They speak as if they have consciousness, but they do not. They say “ow!” if you punch them, but they feel no pain. They are simply there to help usher your consciousness through the world, but possess none of their own.

The concept of zombies is used largely to poke holes in physicalism, the view that nothing exists except physical things, and that anything that exists can be fully described by its physical properties. The “conceivability argument” runs like this: zombies are conceivable; whatever is conceivable is possible; therefore zombies are possible. And if zombies are possible, then two physically identical beings could differ in whether they are conscious at all, which would mean consciousness is something more than the physical. That mere possibility, however unlikely their actual existence may be, raises all kinds of problems with respect to the function of consciousness, among other things (the next entry in particular).

Qualia are, simply, the subjective qualities of experience: what it is like, from the inside, to have a particular sensation. It may seem simple to state that it’s impossible to know exactly what another person’s experience is, but the idea of qualia (that term, by the way, is plural; the singular is “quale”) goes quite a bit beyond the simplicity of that statement.

For example, what is hunger? We all know what being hungry feels like, right? But how do you know that your friend Joe’s experience of hunger is the same as yours? We can even describe it as “an empty, kind of rumbling feeling in your stomach”. Fine, but Joe’s feeling of “emptiness” could be completely different from yours as well. For that matter, consider “red”. Everyone knows what red looks like, but how would you describe it to a blind person? Even if we break it down and discuss how certain light frequencies produce a color we call “red”, we still have no way of knowing whether Joe experiences red the way you experience, say, green.
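For the programmers in the audience, here is a toy model of that “Joe sees green where you see red” scenario (philosophers call it the inverted spectrum). It is only a sketch under loud assumptions: the wavelength bands are rough approximations, the labels “quale_A” and “quale_B” are hypothetical stand-ins for private experiences, and the Observer class is illustrative, not a model of real perception. Each observer learns color words from shared objects, so each one attaches “red” to whatever experience long wavelengths happen to give that particular observer.

```python
# Toy "inverted spectrum": two observers whose private experiences differ
# but whose public color words always agree. Wavelength bands are rough
# approximations; "quale_A"/"quale_B" are hypothetical placeholders.


def color_band(wavelength_nm: float) -> str:
    """Very rough mapping from a wavelength in nanometers to a color band."""
    if 620 <= wavelength_nm <= 750:
        return "long"    # light English speakers learn to call red
    if 495 <= wavelength_nm < 570:
        return "medium"  # light English speakers learn to call green
    return "other"


class Observer:
    def __init__(self, private_experience: dict):
        # Maps a color band to an inner experience nobody else can inspect.
        self.private_experience = private_experience
        # Words are learned from shared objects (ripe tomatoes, grass), so
        # each observer pins "red" to whatever long wavelengths give them.
        self.vocabulary = {
            private_experience["long"]: "red",
            private_experience["medium"]: "green",
        }

    def name_color(self, wavelength_nm: float) -> str:
        band = color_band(wavelength_nm)
        if band == "other":
            return "some other color"
        return self.vocabulary[self.private_experience[band]]


you = Observer({"long": "quale_A", "medium": "quale_B"})
joe = Observer({"long": "quale_B", "medium": "quale_A"})  # spectrum inverted

for wl in (650, 530, 470):
    print(wl, you.name_color(wl), joe.name_color(wl))
```

In this toy, no naming test can tell you and Joe apart, even though the internal mapping is swapped; that gap between behavior and inner experience is exactly what qualia are meant to point at.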

Here’s where it gets very weird. A famous thought experiment on qualia, philosopher Frank Jackson’s “Mary’s Room”, concerns a woman who is raised in a black-and-white room and gets all of her information about the world through black-and-white monitors. She studies and learns everything there is to know about the physical aspects of color and vision: the wavelengths of light, how the eyes and brain process color, everything. She becomes an expert, and eventually knows literally all the physical facts there are to know on these subjects.

Then one day she is released from the room and gets to actually SEE colors for the first time. In doing so, she learns something about color that she didn’t know before. But WHAT?

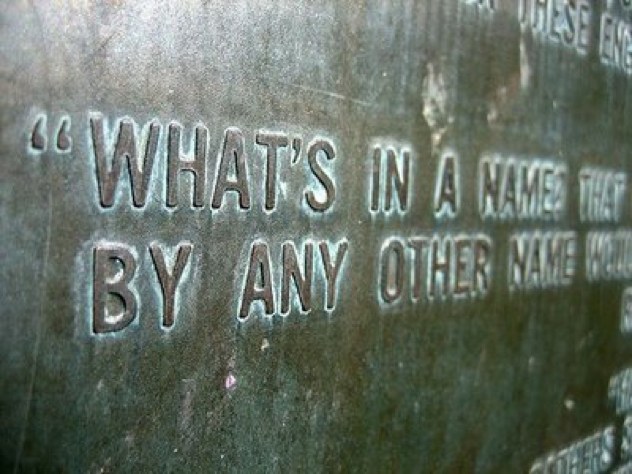

In the 19th century, British philosopher John Stuart Mill set forth a theory of names that held sway for many decades: essentially, that the meaning of a proper name is simply whatever bears that name in the external world. Simple enough. The problem with the theory arises when a name’s bearer does not exist in the external world, which would make a sentence like “Harry Potter is a great wizard” completely meaningless on Mill’s view.

German logician Gottlob Frege challenged this view with his descriptivist theory, which holds that the meanings (semantic contents) of names are the collections of descriptions associated with them. That makes sense of the Harry Potter sentence, since the speaker, and presumably the listener, would attach a description such as “character from popular culture” or “fictional boy created by J.K. Rowling” to the name “Harry Potter”.

It seems simple, but until Frege, philosophy of language had drawn no distinction between sense and reference. That is to say, every term necessarily carries two aspects of meaning: the OBJECT to which it refers (its reference), and the WAY in which it refers to that object (its sense).

Believe it or not, descriptivist theory has had some pretty serious holes blown in it in recent decades, notably by American philosopher Saul Kripke in his book Naming and Necessity. One of his arguments holds (in a nutshell) that if the information we associate with a name is incorrect or incomplete, then, on the descriptivist view, the name would actually refer to some completely different person whom that information fits better. Kripke’s objections only get more headache-inducing from there.
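To make the sense/reference split and Kripke’s objection a little more concrete, here is a toy model of descriptivism in Python, under a big simplifying assumption: a name’s “sense” is a single description string, and its referent is whatever uniquely satisfies that description. The Morning Star/Evening Star case is Frege’s own classic example (both names pick out Venus); the Gödel/Schmidt case mirrors Kripke’s best-known hypothetical, in which an obscure man named Schmidt actually proved the incompleteness theorem and Gödel took the credit. The Thing class, the refer function, and all the fact strings are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Thing:
    label: str        # who or what the thing really is (our bookkeeping only)
    facts: frozenset  # descriptions the thing actually satisfies


def refer(description: str, world: List[Thing]) -> Optional[Thing]:
    """Descriptivist reference: the unique thing fitting the description, else None."""
    matches = [t for t in world if description in t.facts]
    return matches[0] if len(matches) == 1 else None


# Frege: two names with different senses but one and the same referent.
venus = Thing("Venus", frozenset({"bright body seen at dawn", "bright body seen at dusk"}))
sky = [venus]
morning_star = refer("bright body seen at dawn", sky)
evening_star = refer("bright body seen at dusk", sky)
print(morning_star is evening_star)  # True: same object, reached two ways

# Kripke (hypothetical): Schmidt did the proof, Gödel took the credit.
goedel = Thing("Gödel", frozenset({"famous logician"}))
schmidt = Thing("Schmidt", frozenset({"proved the incompleteness theorem"}))
people = [goedel, schmidt]
# If "Gödel" simply means "whoever proved the incompleteness theorem"...
print(refer("proved the incompleteness theorem", people).label)  # Schmidt
```

Under pure descriptivism, the name “Gödel” ends up picking out Schmidt, which is exactly the result Kripke found absurd.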

The Mind-Body problem is an aspect of Dualism, a philosophy that basically holds that every system or domain contains two fundamental types of things or principles (for example, good and evil, light and dark, wet and dry), that these two necessarily exist independently of each other, and that they are more or less equal in their influence on the system. A Dualist view of religion holds this of God and Satan, in contrast with a Monist view (which might accept only one or the other, or hold that we are all one consciousness) or a Pluralist view (which might hold that there are many gods).

The Mind-Body problem, then, is simple: what’s the relationship between body and mind? If dualism is correct, then mind and matter are two fundamentally different kinds of thing, yet each of us seems to be both at once. This raises problems of every kind: are mental states and physical states somehow the same thing? Or do they influence each other? If so, how? What is consciousness, and if it is distinct from the physical body, can it exist OUTSIDE the physical body? What is “self”? Are “you” the physical you? Or are “you” your mind?

The difficulty Dualists have never resolved is how to build a satisfactory picture of a being possessed of both a body AND a mind, which may bring you back to the philosophical zombies up there. That is, unless the next entry goes ahead and obliterates all of this thinking for you, which it might.

Since the release of The Matrix, we have all wondered from time to time whether we could really be living in a computer simulation. We’re all kind of used to the idea by now, and it’s a fun one to kick around. It doesn’t blow our minds anymore, but philosopher Nick Bostrom’s “Simulation Argument” puts it into a perspective that . . . frankly, is probably going to really freak you out, and we’re sorry. You did read the title of the article, though, so we’re not TOO sorry.

First, though, consider the “Dream Argument”. When we’re dreaming, we don’t usually know it; we’re fully convinced of the dream’s reality. In that respect, dreams are the ultimate virtual reality, and proof that our brains can be fooled into taking experiences generated entirely inside our heads for our true physical environment. In fact, there’s no sure way to tell whether you are dreaming right now, or always have been. Now consider this:

Human beings will probably survive as a species long enough to be capable of creating computer simulations that host simulated persons with artificial intelligence. Informing the AI of its nature as a simulation would defeat the purpose—the simulation would no longer be authentic. Unless such simulations are prohibited in some way, we will almost certainly run billions of them—to study history, war, disease, culture, etc. Some, if not most, of these simulations will also develop this technology and run simulations within themselves, and on and on ad infinitum.

So, which is more likely—that we are the ONE root civilization which will first develop this technology, or that we are one of the BILLIONS of simulations? It is, of course, more likely that we’re one of the simulations—and if indeed it eventually comes to pass that we develop the technology to run such simulations, it is ALL BUT CERTAIN.
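The arithmetic behind that “ALL BUT CERTAIN” is simple counting. Here is a minimal sketch under stated, hypothetical assumptions: one root civilization runs a fixed number of simulations, each simulated civilization runs the same number of its own down to some depth, and you are equally likely to be any one of those civilizations. The function name and parameters are invented for illustration.

```python
from fractions import Fraction


def chance_of_being_root(sims_per_civ: int, depth: int) -> Fraction:
    """Probability that a randomly chosen civilization is the unsimulated root.

    Hypothetical assumptions: the root runs `sims_per_civ` simulations, each
    simulated civilization runs the same number of its own, nesting stops
    after `depth` levels, and every civilization is equally likely to be yours.
    """
    simulated = sum(sims_per_civ ** level for level in range(1, depth + 1))
    return Fraction(1, simulated + 1)  # one root among (simulated + 1) total


print(chance_of_being_root(1_000_000_000, 1))  # 1/1000000001
print(chance_of_being_root(10, 2))             # 1/111
```

A billion simulations leaves a one-in-a-billion-and-one chance of being the root; even a modest ten simulations nested two levels deep shrinks it to 1/111.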

Synchronicity, aside from being a very good Police record, is a term coined by famous psychologist and philosopher Carl Jung. It refers to “meaningful coincidences”, and Jung was partly inspired to develop the idea by a very strange event involving one of his patients.

Jung had been kicking around the idea that coincidences which seem meaningfully connected, yet have no apparent causal link, are in fact manifested in some way by the consciousness of the person perceiving them. One patient, who was struggling to make progress in her treatment, dreamed one night that she was given a golden scarab, a costly piece of jewelry in the shape of a beetle. While she was describing the dream to Jung in a session, an insect began tapping against the window of the study in which they sat. Jung opened the window and caught it: a rose chafer, a gold-green beetle that is about the closest thing to a golden scarab found in that part of Europe, and one that had, unusually, tried to fly into a darkened room. He handed it to her with the words “Here is your scarab” and, as the patient gathered her jaw up off the floor, proceeded to describe his theory of meaningful coincidences.

The scarab itself (the patient was familiar with its status as a symbol of death and rebirth in ancient Egyptian religion) symbolized the patient’s need to abandon old ways of thinking in order to progress with her treatment. The incident solidified Jung’s ideas about synchronicity, and their implication that our thoughts and ideas, even subconscious ones, can somehow be connected to events in the physical world and manifest in ways that are meaningful to us.

You probably recognize by now that a main thrust of many of these concepts is an attempt to understand the nature of consciousness. The theory of Orchestrated Objective Reduction is no different, but it was approached by two very smart people from two very different angles: one from mathematics and physics (Roger Penrose, a British mathematical physicist) and one from anesthesiology (Stuart Hameroff, a university professor and anesthesiologist). After years of working separately, they combined their research into the “Orch-OR” theory.

The theory grows out of Penrose’s reading of Gödel’s Incompleteness Theorem, which revolutionized mathematics and states that “any . . . theory capable of expressing elementary arithmetic cannot be both consistent and complete”. Basically, any such formal system will contain true statements that it cannot prove. Penrose took this a step further, arguing that a mathematician can be viewed as a “system” too, and yet human mathematicians can see that statements like Gödel’s are true even though no such system can prove them: “The inescapable conclusion seems to be: Mathematicians are not using a knowably sound calculation procedure in order to ascertain mathematical truth. We deduce that mathematical understanding—the means whereby mathematicians arrive at their conclusions with respect to mathematical truth—cannot be reduced to blind calculation.”

If Penrose is right, then the human brain is not merely performing calculations (like a computer, but way, way faster) but doing . . . something else: something that no computer could ever replicate, some “non-computable process” that cannot be described by an algorithm. There are not many things in science that might fit this description; Penrose’s candidate is quantum wave function collapse, but that opens up a completely new can of worms.
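Gödel’s proof, and Alan Turing’s later proof that some problems cannot be solved by any algorithm, both rely on a diagonal construction. The Python sketch below shows only that core trick (Cantor-style diagonalization), not Gödel’s actual proof and not Penrose’s argument: given a list of yes/no functions, it builds a new function that disagrees with the n-th function on input n, so it cannot appear anywhere in the list. Since every possible program can be written down in one long (infinite) list, the same trick shows that some yes/no functions are computed by no program at all. The sample “programs” are arbitrary.

```python
from typing import Callable, List

Predicate = Callable[[int], bool]


def diagonal(listed: List[Predicate]) -> Predicate:
    """Build a yes/no function that differs from listed[n] on input n, for every n."""

    def escapee(n: int) -> bool:
        if n < len(listed):
            return not listed[n](n)  # flip the n-th function's answer at n
        return False                 # past the end of the list, any answer works

    return escapee


# An arbitrary sample of "programs" that answer yes/no about whole numbers.
programs: List[Predicate] = [
    lambda n: n % 2 == 0,
    lambda n: n > 3,
    lambda n: True,
    lambda n: n * n < 5,
]

d = diagonal(programs)
for i, program in enumerate(programs):
    print(i, program(i), d(i))  # the two answers never agree
```

However long the list gets, the diagonal function always escapes it, which is the sense in which “non-computable” things are guaranteed to exist.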

Quantum physics deals with particles (or maybe they’re waves) so small that even the act of observing or measuring them can change what they’re doing. That idea is closely tied to the so-called Uncertainty Principle, first described by Werner Heisenberg in 1927 (which may answer a different question that has bothered a few of you for some time).

This is one proposed explanation for the strange, dual nature of quanta. If a particle appears to be in two places at once, acts like a wave at one point and a particle the next, or appears to pop in and out of existence (all things that are known to be par for the course at the quantum level), it may be because the act of measurement, of observation, influences what is being observed.

Because of this, while it may be possible to measure one property of a quantum object precisely (say, an electron’s position), the means used to make that measurement (say, bouncing a photon off it) will disturb a complementary property (its momentum, and therefore its velocity), so that a COMPLETE picture of the object’s state is impossible: the more precisely one property is pinned down, the more uncertain the other becomes. Simple, right?
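In its standard modern form, Heisenberg’s relation reads Δx·Δp ≥ ħ/2, where Δx is the spread in position, Δp the spread in momentum (mass times velocity), and ħ the reduced Planck constant. Here is a minimal Python sketch of what that means in practice, comparing an electron confined to roughly the width of an atom with a baseball located to within a millimeter; both scenarios are illustrative choices, and the function name is invented for this example.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # kilograms


def min_velocity_spread(mass_kg: float, position_spread_m: float) -> float:
    """Smallest velocity uncertainty (m/s) allowed by dx * dp >= hbar / 2.

    Uses dp = m * dv, so dv >= hbar / (2 * m * dx).
    """
    return HBAR / (2 * mass_kg * position_spread_m)


# An electron pinned down to roughly the width of an atom (about 1e-10 m)...
print(f"electron: {min_velocity_spread(ELECTRON_MASS, 1e-10):.2e} m/s")
# ...versus a 145-gram baseball located to within a millimeter.
print(f"baseball: {min_velocity_spread(0.145, 1e-3):.2e} m/s")
```

The electron’s velocity becomes uncertain by roughly 5.8 × 10^5 meters per second (hundreds of kilometers per second), while the baseball’s uncertainty is around 10^-31 meters per second, far too small to ever notice. That is why the weirdness only shows up at the quantum scale.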

There are a number of problems with the “Big Bang” model of cosmology, not least the question of how it all ends: either in a theoretical “Big Crunch”, in which the expanding Universe contracts again (the “oscillating universe” theory), or in the ultimate heat death of the Universe. One theory that eliminates all of these problems is the theory of Eternal Return, which suggests simply that there is no beginning OR end to the Universe; it recurs infinitely, as it always has.

The theory depends on infinite time and space, which is by no means certain. But assuming a Newtonian cosmology, at least one mathematician has proven that the eternal recurrence of the Universe is a mathematical certainty, and of course the concept shows up in many religions, both ancient and modern.
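Recurrence arguments of this kind usually lean on two ingredients: a system that can only be in a limited (finite or bounded) set of states, and unlimited time in which to run. The Python toy below illustrates that intuition only, not the specific proof mentioned above: a four-cell “universe” whose cells hold the values 0 to 2 evolves by a fixed, reversible rule (the rule itself is an arbitrary invention). Because there are only 3^4 = 81 possible states and the rule never merges two states into one, the universe is guaranteed to return exactly to where it started.

```python
def step(state: tuple, k: int) -> tuple:
    """One tick of a made-up reversible rule: rotate cells left, bump the last (mod k)."""
    rotated = state[1:] + state[:1]
    return rotated[:-1] + ((rotated[-1] + 1) % k,)


def recurrence_time(initial: tuple, k: int) -> int:
    """Count ticks until the toy universe returns exactly to its initial state."""
    state, ticks = step(initial, k), 1
    while state != initial:
        state, ticks = step(state, k), ticks + 1
    return ticks


start = (0, 2, 1, 0)  # 4 cells, each holding 0, 1 or 2: only 3**4 = 81 states
print(recurrence_time(start, k=3))  # 12
```

With an irreversible rule, the system would still have to repeat some state eventually, but not necessarily its starting one; reversibility is what brings it all the way back.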

This concept is central to the writings of Nietzsche, and has serious philosophical implications as to the nature of free will and destiny. It seems like a heavy, almost unbearable burden to be pinned to space and time, destined to repeat the entirety of our existence throughout a literal eternity—until you consider the alternative . . .

If the concept of a Universe with no beginning or end, in which the same events take on fixed and immovable meaning, seems heavy, then consider the philosophical concept of Lightness, which is the exact opposite. In a Universe in which there IS a beginning and there IS an end, in which everything that exists will very soon exist no more, everything is fleeting and nothing has meaning. Paradoxically, that makes Lightness the ultimate burden to bear, in a Universe in which everything is “without weight . . . and whether it was horrible, beautiful, or sublime . . . means nothing.”

The above quote is from the appropriately titled novel “The Unbearable Lightness of Being” by reclusive author Milan Kundera, an in-depth exploration of the philosophy that we are really not sure we ever want to read. However, Zen Buddhism endorses this concept, and teaches its followers to rejoice in it. Indeed, many Eastern philosophies view the recognition and acceptance of this condition as a form of perfection and enlightenment.

We suppose it all depends on your personal point of view, which . . . now that we think about it, is sort of the point of all of this.