10 Incredible Ways Technology May Make Us Superhuman

In the second half of the twentieth century, medical science came up with some pretty astonishing ways to replace human parts that were starting to wear out. Though the idea is commonplace now, the invention of the artificial pacemaker in the ’50s must have seemed like science fiction come to life at the time. Today, medical technology routinely restores a modicum of hearing to the deaf and sight to the vision-impaired, and if a pacemaker won’t cut it, we can simply replace a faulty heart like the water pump in your old Ford.

These technologies that were in their infancy just a few decades ago are now so well-established as to seem downright mundane. The medical tech that is in its infancy today likewise seems like science fiction—and if history has taught us anything, it’s that this means we’ll probably see a lot of it in use very soon (if it isn’t already). Oh, and while there are certainly applications for many of these to replace those worn-out parts, many others are intended specifically to improve upon perfectly good parts in unprecedented ways.

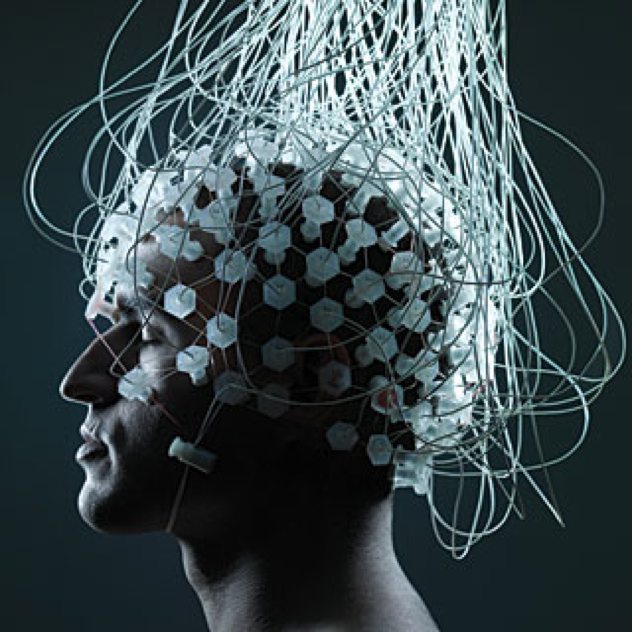

A brain-computer interface (BCI) is exactly what it sounds like: a communication link between the human brain and an external device. BCIs have been the realm of sci-fi for decades, but believe it or not, this hasn’t been speculative technology for some time. There are many fully functional interfaces for a variety of applications, and the earliest devices of this type to be tested in humans showed up in the mid ’90s. It’s safe to say that the research is not slowing down.

It has been known since the 1920s that the brain produces electrical signals, and it has been speculated ever since that those signals might be used to control a mechanical device, or vice versa. Since research into BCIs began in earnest in the ’60s (usually with monkeys as test subjects), many different designs have been produced, with varying levels of “invasiveness” depending on the application, and research has progressed particularly quickly within the last 15 years or so.

Most applications involve either the partial restoration of sight or hearing, or the restoration of movement to people with paralysis. In early 2013, one completely non-invasive prototype was demonstrated to enable a paralyzed stroke victim to operate a computer. In a nutshell, the device picks up the signals from the eyes that are routed to the visual cortex at the back of the brain, and analyzes their different frequencies to determine what the patient is looking at. This lets the patient move a cursor on a screen with nothing but eye movements, using a device that amounts to a helmet.
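For the curious, here is a rough sketch of the frequency-analysis idea. It assumes (our assumption, not a published detail of the 2013 prototype) that each on-screen target flickers at its own rate, so whichever rate dominates the signal from the back of the brain reveals where the user is looking. The function names, the 256 Hz sample rate and the flicker rates are all hypothetical, and the "brain signal" is a clean synthetic sine wave rather than real, noisy EEG.

```python
import math

def band_power(signal, fs, freq):
    """Power of `signal` at `freq` Hz: correlate with a cosine/sine pair (one DFT bin)."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return re * re + im * im

def detect_target(signal, fs, target_freqs):
    """Return the index of the candidate flicker frequency carrying the most power."""
    powers = [band_power(signal, fs, f) for f in target_freqs]
    return max(range(len(powers)), key=powers.__getitem__)

# Hypothetical setup: 2 seconds of signal sampled at 256 Hz, dominated by a
# 12 Hz response with a weaker 10 Hz component mixed in (synthetic, not real EEG).
fs = 256
signal = [math.sin(2 * math.pi * 12 * n / fs) + 0.3 * math.sin(2 * math.pi * 10 * n / fs)
          for n in range(2 * fs)]
targets = [8.0, 10.0, 12.0, 15.0]  # hypothetical flicker rates of four on-screen targets

print(detect_target(signal, fs, targets))  # prints 2 (the 12 Hz target)
```

Real systems have to cope with noise, harmonics and much shorter time windows, so this only illustrates the core "which frequency is strongest?" step.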

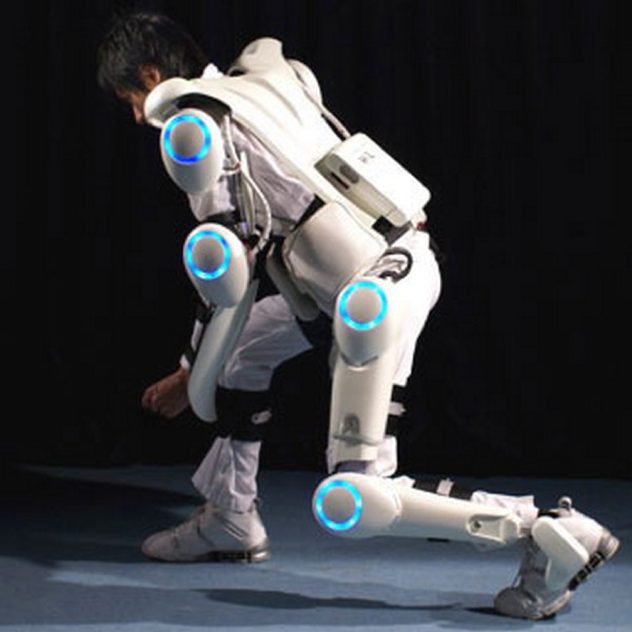

The general public’s concept of the powered exoskeleton is closer to “powered battle armor,” thanks to Robert Heinlein’s novel “Starship Troopers” and a very popular character from an increasingly pervasive multimedia franchise. The tech that’s actually being developed is geared less toward battling giant robots and invading aliens, and more toward either restoring mobility to the disabled or augmenting endurance and load-carrying capacity.

For example, one company manufactures a 50-pound aluminum and titanium suit called the Ekso that has seen use in dozens of hospitals around the U.S. It has enabled people with paralyzing spinal cord injuries to walk, an application that was once impractical because of the bulk and weight of such suits.

The same technology was licensed by Lockheed Martin for its Human Universal Load Carrier (HULC, oddly enough), which has been extensively tested and may be deployed for military use within a year. It enables a man of normal fitness to carry a 200-pound load at up to ten miles per hour, for long periods, without breaking a sweat. While the Ekso takes pre-programmed steps for its users, the HULC uses accelerometers and pressure sensors to provide a mechanical assist to the user’s natural movements.

We should note that a Japanese firm has produced a similar device with medical applications called “Hybrid Assistive Limb,” or HAL, which, given that HAL is also the name of a famously murderous fictional computer, might not have been such a hot idea. Oh, and the company’s name? Cyberdyne. We are not kidding.

A neural implant is a device placed inside the body to interface directly with the nervous system, most dramatically when it is inserted into the brain itself. While a neural implant can be a BCI and vice versa, the terms are not synonymous. What exoskeletons do for the body, implants do for the brain: while most are meant to repair damaged areas and restore lost function, others are meant to give the brain a power assist or a pathway to external devices.

The use of neural implants for deep brain stimulation—the transmission of regularly spaced electrical impulses to specific regions of the brain—has been approved by the Food and Drug Administration to treat various maladies, with the first approval coming in 1997. It has been proven effective at treating Parkinson’s disease and dystonia, and has also been used to treat chronic pain and depression with varying degrees of efficacy.

Thus far, the most commonly used neural implants are cochlear implants (approved by the FDA in 1984) and retinal implants, both pioneered in the 1960s and proven effective at partially restoring hearing and vision, respectively. Fun fact: the inventor of the cochlear implant was Dr. House—William House, who passed away in 2012, and whose brother Howard was also a physician.

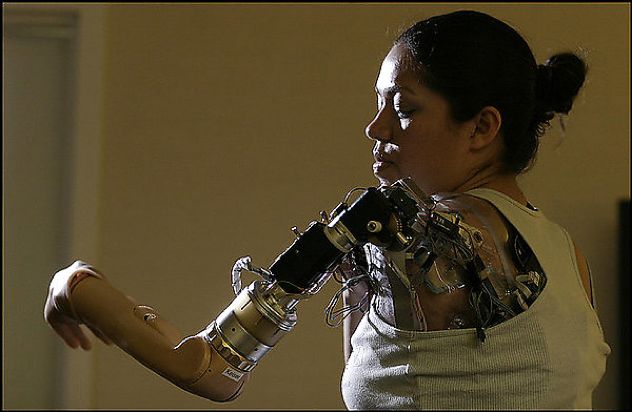

Prosthetics have been used to replace missing limbs for centuries, but the modern version, cyberware, strives for not just an aesthetic replacement but a functional one: a limb with natural functionality and appearance. And while brain interfaces like those described above are already being used to control robotic prosthetic devices, other research in this field seeks to remove the limitations inherent in that approach.

Many existing devices use non-invasive interfaces that detect the subtle activity of, say, chest and/or biceps muscles to control a robotic arm. Modern devices of this type are capable of some pretty fine motor control, having improved drastically in this respect over the last decade or so. Researchers are also working on a two-way interface: a robotic prosthetic that lets patients FEEL what they are touching with the artificial limb. Even this only scratches the surface of what’s envisioned for the future of this tech.

At Harvard, the emerging fields of tissue engineering and nanotechnology have been combined to produce a “cyborg tissue”—an engineered human tissue with embedded, functional, bio-compatible electronics. Says research team lead Charles Lieber: “With this technology, for the first time, we can work at the same scale as the unit of biological system without interrupting it. Ultimately, this is about merging tissue with electronics in a way that it becomes difficult to determine where the tissue ends and the electronics begin.” And we are officially talking about full-on cyborg technology, in development right now.

Extrapolating many concepts from the previous examples into the future, consider the Exocortex. This is a theoretical information processing system that would interact with, and enhance the capabilities of, your biological brain—the true merging of mind and computer.

This doesn’t just mean that your brain would have better information storage (though it would mean that), but also better processing power: exocortices would aid in high-level thinking and cognition. If that sounds a little heavy, remember that humans have long used external systems for this purpose. After all, we couldn’t have modern mathematics and physics without the ancient technologies of writing and numbers, and computers are merely another point on that same long, long technological graph.

Also, consider that we already use computers as extensions of ourselves. The Internet itself can be thought of as a prototype of this very technology, since it gives us all access to vast stores of information, and the devices we use to access it give us the means to process and assimilate that information with our brains, which are just bigger processing devices. Merging the two processors could theoretically level the playing field in terms of human intellect, enabling all of us to perform the most complex high-level mental functions as easily as you are reading this article. Theoretically.

Human gene therapy and genetic engineering hold perhaps more promise, and more potential for a vast array of complications, than any other scientific development ever. Our understanding of genetics and our ability to modify genes are so new to science that it is a gross understatement to say their implications are not yet understood. Of the applications known to be possible (and there are many), the majority are still in the “too dangerous to even attempt on humans” phase of development.

The most obvious application is the eradication of genetic diseases. Some genetic conditions can be cured in adults by gene therapy, but the real promise lies in the ability to test for such conditions in embryos, and the ethical implications here are staggering. It’s possible to test not only for genetic diseases and abnormalities but also for other “conditions” like eye color and sex, and the possibility of actually being able to design your baby from the ground up is absolutely within sight. Of course, we all know how expensive technology works in a free market, and it’s easy to envision a future where only the wealthy can afford “enhancements” for their offspring. Considering that we humans have demonstrated a very limited ability to reconcile differences in race, gender and sexuality, it’s safe to say that this technology may very well lead to the most complicated social issues in the history of humanity.

Indeed, researchers have been able to easily create mice with enhanced strength and endurance, and this field also includes stem cell research, with its promise to eventually be able to cure damn near anything. When it comes to the potential for increasing the durability and longevity of the human body, not many fields hold more promise—except perhaps for one…

Nanotech is quite prevalent in the public imagination as a likely cause of the end of the world, but it is also coming along at a lightning pace, and its medical applications, taken to their logical endpoint, hold the promise of nothing less than the eradication of all human diseases and maladies, up to and including death.

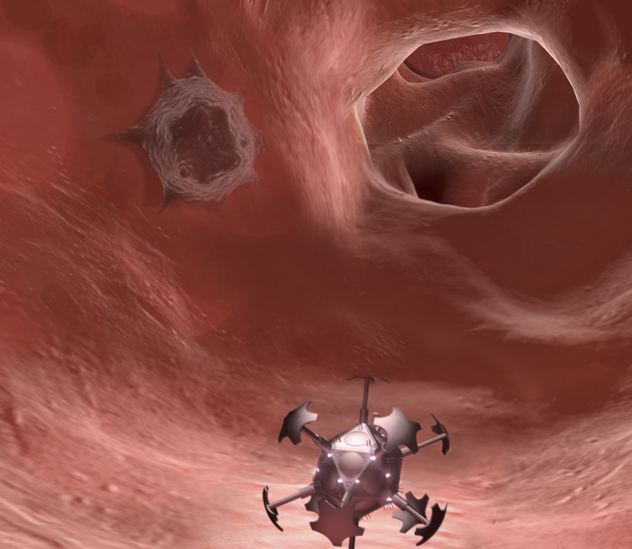

Current nanomedicine applications involve new and highly accurate ways to deliver drugs to specific locations in the body, along with other treatments in which tiny particles (tiny on a molecular scale) are dispersed into the body. For example, an experimental lung cancer treatment uses nanoparticles that are inhaled as an aerosol and settle in diseased areas of the lungs; the particles are then heated using an external magnet, killing the diseased cells. The body’s own response eliminates the dead cells AND the nanoparticles. This method has been used successfully in mice, and while it does not yet kill 100% of the diseased cells in an affected area, it comes close, and the tech is in its infancy.

Speculative uses of this technology involve nanobots: microscopic, self-replicating machines that could be programmed to target cells for destruction, drug therapy or rebuilding. Of course, this could theoretically apply not only to diseased cells but to damaged ones, perhaps allowing much speedier recovery from injury and even the reversal of aging. The logical progression here ends with a remarkably durable, age-proof human body, but even if that never comes to fruition, it’s not as if this is the only way we’re attempting to cheat death with science…

It is here that we get into the realm of what has become known as “Transhumanism”: the notion that we may one day be able to surpass our physical limits, perhaps even to discard our bodies or live beyond them. This notion was first suggested as a realistic prospect by Robert Ettinger, who in 1962 wrote “The Prospect of Immortality” and who is considered a pioneering Transhumanist and the father of Cryonics.

Cryonics is essentially the study of preserving humans or animals (or parts of them, like the brain) at extremely low temperatures (below −150 °C, or −238 °F), which was the best means of preservation available at the time Ettinger wrote his book. Today’s brain preservation studies focus more on chemical preservation, which has been demonstrated on brain tissue (but not an entire brain) and does not require the ridiculous temperatures demanded by Cryonics.

This is, of course, an inexact science. Researchers in the field are well aware that it’s impossible (at this point) to determine how much, if any, of what makes up a person’s mind is preserved along with the brain, no matter how physically perfect the preservation. The field relies on the further development of overlapping sciences that are still purely speculative, such as…

As we’re able to replace more and more of our body parts with versions that have been engineered, grown in a lab, or both, it stands to reason that we’ll one day reach an endpoint at which every part of the human body can be replicated, including the brain. Right now, a collaborative effort among 15 research institutions is underway to create hardware that emulates different sections of the human brain; their first prototype is an 8-inch wafer containing 51 million artificial synapses.

Oh, the “software” is being replicated too: the Swiss “Blue Brain Project” is currently using a supercomputer to reverse-engineer the brain’s processing functions, and has already simulated many elements of the activity of a rat brain. The project’s leader, Henry Markram, told the BBC that they will build an artificial brain within ten years.

Our muscles, blood, organs: artificial versions of all of these are in various stages of development, and at some point the prospect of assembling a fully functional artificial human body will be within sight. And if we develop the software to run such magnificent machines (having androids would be pretty cool), their significance for us would grow enormously with the development of a complementary technology, one that is less far-fetched than it may seem…

We’ve previously mentioned futurist Ray Kurzweil and his insanely accurate track record of predicting new technologies. Kurzweil believes that by 2040 to 2045, we will be able to literally upload the contents of our consciousness into a computer, and he’s not the only one who thinks so.

Of course, many argue that brain functions cannot be reduced to simple computation, that they are not “computable,” and that consciousness itself poses a problem that science will never be able to solve. There is also the question of whether an uploaded or otherwise “backed up” mind would really be the person who was copied, or a different consciousness altogether. Hopefully, these are questions that neuroscience will soon be able to answer.

But if indeed we are ever able to inject our very minds into the digital realm, the obvious implication is that our consciousness need never terminate—we need never die. We can hang out indefinitely in fantastically rendered digital worlds, and load ourselves into a Cyberdyne X-2000 Mind Vessel when we have business in the real world; transmit ourselves through space, perhaps even through time, and share knowledge instantaneously across all of humanity.

Smarter people than us are expecting these developments within your lifetime. Even if they are only partially correct, we’re going to go out on a limb and say that despite the exponential explosion of technology within the last couple of decades, we ain’t seen nothing yet.