10 Important Firsts of Modern Medicine

If you were asked to imagine an aspect of modern healthcare, there are a number of things you might come up with: an operating theatre, perhaps, or an ambulance with sirens blaring. Some things in medicine are so ubiquitous that it’s hard to imagine a world without them, but as with everything, there had to be a first time. This is a list of ten important breakthroughs that have all gone on to become iconic aspects of modern medicine.

Ambulances are fundamental to modern healthcare. A minute can be the difference between life and death, so getting medical personnel to a patient and the patient to hospital quickly is essential. The earliest ambulances were horse-drawn, first used on battlefields to carry wounded soldiers to safety and treatment. By the 1860s, hospital-based ambulances for civilians were introduced, capable of dashing to emergencies within thirty seconds of a call.

The first motorized ambulance was introduced in Chicago in 1899, donated by five hundred local businessmen. New York received motorized ambulances a year later. They boasted a smoother ride, greater comfort, more safety for the patient, and a faster speed than horse-drawn ambulances. An article from the New York Times from September 11, 1900, describes them as having a range of twenty-five miles and a top speed of sixteen miles per hour.

The three-wheeled Pallister ambulance, introduced in 1905, was the first to be powered by gasoline. In 1909, James Cunningham, Son & Co—a hearse manufacturer—developed the first mass-produced ambulance, which was powered by a four-cylinder internal combustion engine. Rather than the modern-day sirens with which we’re all familiar, this ambulance featured a gong. How cool is that?

The defibrillator is a key prop in TV hospital dramas. A doctor, paddle in each hand, yells “clear!” and zaps a patient into consciousness. It is one of the most recognizable pieces of medical equipment, famed for its ability to bring people back from the very brink of death. It works by shocking the heart back from an abnormal rhythm to the correct pace. Contrary to many people’s perception, it doesn’t actually restart a stopped heart. These days, automated defibrillators can often be found outside hospitals—and like fire extinguishers, they can save lives even when used by completely untrained passersby.

The very first defibrillation was performed in 1947. Since the start of the twentieth century, experiments on dogs had shown that there could be some benefit to shocking a heart in ventricular fibrillation (VF), a type of abnormal rhythm. Surgeon Claude Beck was a proponent of the potential of this treatment. He was operating on a fourteen-year-old boy when the heart, irritated by anesthesia, went into VF. The heart was massaged by hand for forty-five minutes, and then shocks from paddles were applied directly, restoring normal rhythm. The boy went on to make a full recovery, and Hollywood’s favorite medical device was born.

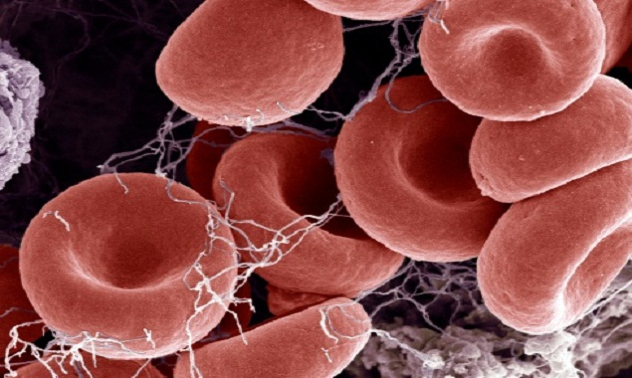

Blood transfusion is vital in saving lives. In the popular imagination, it involves blood being pumped into an accident victim by a team of ER doctors, but blood donations also allow life-saving surgery and a wide range of medical treatments.

The history of blood transfusion goes back to the seventeenth century. Early experiments were carried out between animals, and also from animals to humans. Eventually human-to-human transfusion was tried, but one key problem was that blood left outside the body for just a few minutes would coagulate and eventually become solid. A donor needed to give their blood directly to the receiver. This presented obvious practical issues.

The very first transfusion of blood that didn’t pass directly from donor to recipient was performed by Albert Hustin, a Belgian doctor, in 1914. He mixed the blood with sodium citrate and glucose, which acted as an anticoagulant.

The same procedure was repeated by Luis Agote in Argentina a few months later. The technique of “citration” allowed blood to be donated and stored for later use. Today, ninety-two million people donate blood each year worldwide, helping to save millions of lives.

If you can give blood, please do so. Readers in the US can visit http://www.americasblood.org, or you can search for blood donation centers wherever you are in the world. You will save lives—and can even read Listverse on your phone while you do it.

Medical journals are the basis upon which medical knowledge is shared and subjected to peer review. The oldest medical journal still in print today is the New England Journal of Medicine, which was first published in 1812. There are now hundreds of thousands of scientific journals published each year, and many of these are dedicated to medicine.

The earliest journal dedicated entirely to medicine was published in French in 1679, and the first English-language medical journal—“Medicina Curiosa”—came five years later.

Medicina Curiosa’s full title was “Medicina Curiosa: or, a Variety of new Communications in Physick, Chirurgery, and Anatomy, from the Ingenious of many Parts of Europe, and Some other Parts of the World.” Its first issue was published on June 17, 1684, and featured instructions for treating ear pain and a tale about death from the bite of a rabid cat. Sadly, the journal lasted only two issues.

Cholera was one of the most feared diseases in nineteenth-century Europe. It triggered a flurry of medical research and subsequent advances, and the hunt for its cause features later in this list. There was no known cure for cholera. Because it is a bacterial infection, it took more than a hundred years, and the invention of antibiotics, to come up with a way of targeting the illness. Treatment in the mid-1800s was focused on relieving the symptoms. Cholera often killed its victims by causing severe dehydration, since people who were stricken by it could produce up to five gallons of diarrhea per day. Simply drinking water isn’t enough to rehydrate a person with cholera.

The physician Thomas Latta, like many of his time, took a keen interest in discussions over the disease. A doctor named W. B. O’Shaughnessy had lectured about the idea of intravenous injection, but had never tried it in practice. Latta was inspired, and decided that the procedure should at least be attempted. He described his decision, writing, “I at length resolved to throw the fluid immediately into the circulation. In this, having no precedent to direct me, I proceeded with much caution.”

He pumped six pints of fluid into an aged female suffering from the effects of cholera, and witnessed a remarkable—practically instant—improvement. Unfortunately, she died several hours later after having been turned over to the hospital surgeon, who failed to repeat the procedure. Regardless, the success of the new treatment was taken on board by many other doctors, and it quickly rose to prominence. The enormous potential of intravenous fluids wasn’t fully realized until the end of the nineteenth century; today, the IV drip is an iconic piece of medical equipment, and helps to keep alive even the most severely ill among us.

Almost everyone reading this will have taken a powdered pill at some point in their lives. Uncountable numbers of pills are produced each year; a single pill factory will often produce an output numbering in the billions.

While pills in some form go back thousands of years, they were often little more than squished-up bits of plant matter. Early nineteenth-century attempts to produce pills based on specific chemicals ran into many problems. Coatings would often fail to dissolve, and the moisture required in pill production could often deactivate ingredients.

In 1843, English artist William Brockedon was facing similar problems with graphite pencils. So he invented a machine which was able to press graphite powder into a solid lump, thereby producing high-quality drawing tools. A drug manufacturer saw that the device had potential for other uses, and Brockedon’s invention was soon being used to create the very first powder-based tablets. This technology was adapted to mass manufacturing before the end of the century, giving rise to the pill-fueled world we live in today.

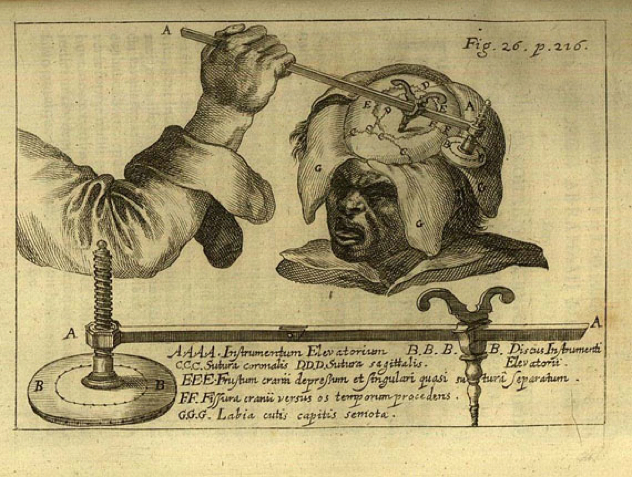

Modern surgery is certainly capable of spectacular feats—but these are only made possible by anesthesia. The ability to sedate a patient, rendering them motionless and free of pain, is essential in almost any invasive procedure. The first reputable, recorded instance of surgery under general anesthesia took place in 1804, performed by Japanese doctor Seishu Hanaoka.

Hanaoka gave a mixture of plant matter to a sixty-year-old woman suffering from breast cancer. Called tsusensan, this mixture contained a number of active ingredients that make a person impervious to pain after around two to four hours, ultimately knocking them unconscious for up to a day.

While this mixture was not as safe as modern anesthetics, it was certainly effective—and its effects allowed Hanaoka to perform a partial mastectomy. He continued to use the mixture to perform a number of operations. By the time of his death over thirty years later, he’d operated more than one hundred and fifty times on cases of breast cancer alone, at a time when the idea was just beginning to dawn in the Western world.

We’ve mentioned before how important the invention of vaccination was to the world, and the amazing things it’s achieved. One of the most important factors in vaccination is herd immunity, ensuring that enough people are immunized to prevent the spread of a disease through a community. This helps protect those people who for various reasons can’t be immunized, such as young babies or cancer patients. Achieving herd immunity requires a large percentage of uptake—often more than ninety-five percent of a population. Persuading that many people to do anything requires a lot of effort, so a lot of vaccination is government-mandated.

In 1840, the United Kingdom passed the “Vaccination Act,” which provided free vaccinations for the poor. By 1853, vaccination was compulsory for all babies younger than three months—and in 1867, everyone under the age of fourteen had to be vaccinated against smallpox. Not complying with the laws could land you a fine or even imprisonment.

While this use of compulsion made great headway in eradicating smallpox, it also gave birth to the anti-vaccination movement. Many saw it as an intrusion into personal liberty, and there were riots in many parts of the UK. In 1898, the laws were relaxed to allow people to conscientiously object to receiving vaccines—but the benefits of vaccination programs to public health are well-supported with evidence.

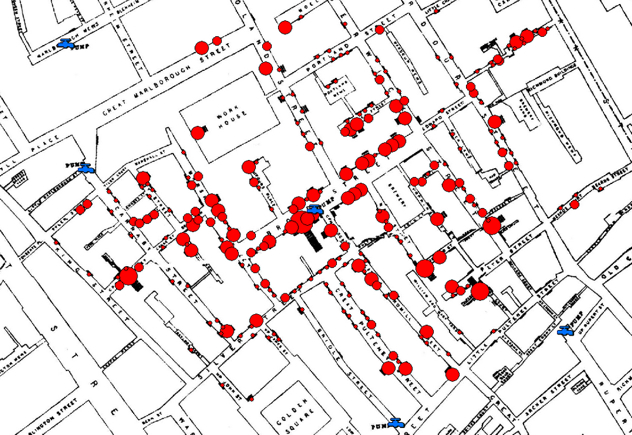

Epidemiology can be summarized as the study of illness in a population. Epidemiologists use observation and statistics to find patterns, causes, and ways to prevent or treat disease. It is the basic science behind public health policy. The word derives from “epidemic,” and originally described the study of epidemics before taking on a broader meaning.

Dr John Snow, who is considered the “father of epidemiology,” was responsible for the first and most classic example of epidemiology in use. In the mid-nineteenth century, the prevailing theory was that cholera and many other diseases were caused by miasmas, which are basically poisonous gases. Snow, on the other hand, was an early proponent of germ theory, though he opted to keep this quiet as very few respected it at the time. He believed that cholera was possibly spread by polluted water, which he cleverly described as potentially containing a “poison.”

During an outbreak in London in the 1850s, Snow analyzed the water supplies of those killed. He found that many of the victims had been drinking water whose source contained sewage.

Critics still weren’t convinced, so when another outbreak occurred, Snow made a map of all of the victims, and found that a whole cluster of them lived near a particular water pump. Many of those who didn’t live near the pump could remember drinking from it in passing. Nearby communities with separate water supplies weren’t affected by the outbreak. Snow persuaded authorities to remove the pump’s handle, and the epidemic soon ceased. Unfortunately, the established miasma theory remained firmly entrenched.

Snow struggled to persuade any but a small minority of the truth of his own theory. He died of a stroke in 1858, largely unrecognized for his pioneering work. It was decades later that his efforts were rediscovered, giving rise to an entire field of science and earning him a deserved place in medical history.

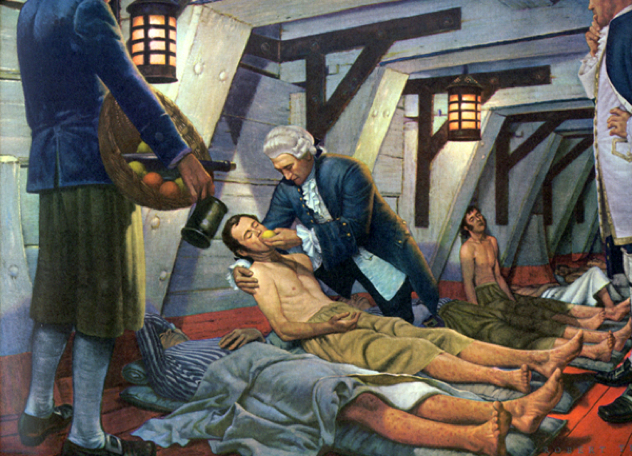

The clinical trial allows us to find out what works—and what doesn’t work—in medicine. Other types of evidence, such as anecdotes or expert opinion, are comparatively unreliable. You can’t rely on an anecdote to say a drug cured you, because you may have simply recovered on your own, without the aid of the drug.

The only way to know that a medical intervention is efficacious (that is to say, that it has more than a placebo effect) is to test it on groups of people and see if there is a pattern of improvement among those receiving the treatment. This approach is at the core of almost all medical research done today.

The first clinical trial was carried out on a ship. Twelve patients suffering from scurvy were divided into six groups of two, and each group was subjected to different remedies. Vinegar was tried on one group, and sea water on another, but the real breakthrough came when one group was given oranges and lemons. We know now that scurvy is a disease caused by lack of vitamin C, so the group receiving citrus showed a marked improvement. Within six days, the two sailors receiving oranges and lemons had almost fully recovered; one was able to return to duty, and the other was appointed to nurse the remaining patients due to his improved health.

James Lind, the doctor responsible for the test, concluded: “I shall here only observe that the result of all my experiments was that oranges and lemons were the most effectual remedies for this distemper at sea . . . perhaps one history more may suffice to put this out of doubt.” Not only were his conclusions about scurvy shown to be true; he had unwittingly established the core of medical experimentation, as it would remain almost three hundred years later.

Alan is an aspiring writer trying to kick-start his career with an awesome beard and an addiction to coffee. You can hear his bad jokes by reading them aloud to yourself from Twitter, where he is @SkepticalNumber.
