10 More Common Faults in Human Thought

This list is a follow-up to Top 10 Common Faults in Human Thought. Thanks for everyone’s comments and feedback; you have inspired this second list! It is amazing that with all these biases, people are able to have a rational thought every now and then. There is no end to the mistakes we make when we process information, so here are 10 more common errors to be aware of.

The confirmation bias is the tendency to look for or interpret information in a way that confirms existing beliefs. Individuals reinforce their ideas and attitudes by selectively collecting evidence or retrieving biased memories. For example, I think that there are more emergency room admissions on nights when there is a full moon. I notice on the next full moon that there are 78 ER admissions; this confirms my belief, and I fail to look at admission rates for the rest of the month. The obvious problem with this bias is that it allows inaccurate information to be held as true. Going back to the above example, suppose that on average, daily ER admissions are 90. My interpretation that 78 is more than normal is wrong, yet I fail to notice, or even consider it. This error is very common, and it can have risky consequences when decisions are based on false information.
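The check that the full-moon believer skips can be sketched in a few lines of Python. This is only an illustration using the two numbers from the example above (78 admissions on the full moon, an average of 90 per day); the function name `compare_to_baseline` is made up for this sketch.

```python
def compare_to_baseline(observed, baseline_mean):
    """Say whether a single observation is above, below, or equal to the average."""
    difference = observed - baseline_mean
    if difference > 0:
        return "above average"
    if difference < 0:
        return "below average"
    return "average"


# Numbers taken from the full-moon example in the text.
full_moon_admissions = 78
average_daily_admissions = 90

print(compare_to_baseline(full_moon_admissions, average_daily_admissions))
```

Comparing against the baseline, rather than looking at the full-moon night in isolation, is what exposes the mistaken belief.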

The Availability heuristic is gauging what is more likely based on vivid memories. The problem is that individuals tend to remember unusual events more than everyday, commonplace events. For example, airplane crashes receive lots of national media coverage. Fatal car crashes do not. As a result, more people are afraid of flying than of driving a car, even though statistically airplane travel is safer. Media coverage feeds into this bias; because rare or unusual events such as medical errors, animal attacks and natural disasters are highly publicized, people perceive these events as having a higher probability of happening.

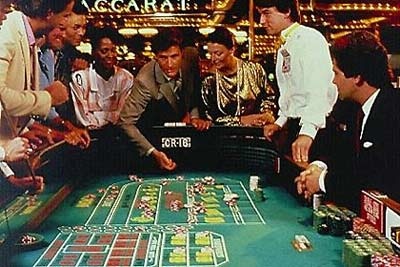

Illusion of Control is the tendency for individuals to believe they can control or at least influence outcomes that they clearly have no influence on. This bias can influence gambling behavior and belief in the paranormal. In studies conducted on psychokinesis, participants are asked to predict the results of a coin flip. With a two-sided fair coin, participants will be correct about 50% of the time on average. However, people fail to realize that probability or pure luck is responsible, and instead see their correct answers as confirmation of their control over external events.
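A quick simulation can make the 50% figure concrete. The sketch below is not taken from any of the studies mentioned; it simply assumes a fair coin and a participant who guesses at random, and the function name `simulate_guesses` and the seed value are arbitrary choices for illustration.

```python
import random


def simulate_guesses(n_flips, seed=0):
    """Return the fraction of correct guesses when guessing a fair coin at random."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    correct = 0
    for _ in range(n_flips):
        guess = rng.choice("HT")  # the participant's prediction
        flip = rng.choice("HT")   # the actual coin flip
        if guess == flip:
            correct += 1
    return correct / n_flips


accuracy = simulate_guesses(10_000)
print(f"Fraction of correct guesses: {accuracy:.3f}")
```

Over many flips the fraction of correct guesses settles close to one half, with no skill or influence involved.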

Interesting Fact: when playing craps in a casino, people will throw the dice hard when they need a high number and softly when they need a low number. In reality, the strength of the throw will not guarantee a certain outcome, but the gambler believes they can control the number they roll.
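For two fair six-sided dice, the chance of each total comes purely from counting the 36 equally likely combinations. The sketch below enumerates them; it assumes fair dice, and the function name `two_dice_distribution` is invented for this example.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def two_dice_distribution():
    """Map each possible total of two fair dice to its exact probability."""
    # Count how many of the 36 equally likely (die1, die2) pairs give each total.
    counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))
    return {total: Fraction(n, 36) for total, n in sorted(counts.items())}


for total, probability in two_dice_distribution().items():
    print(total, probability)
```

Nothing in this calculation depends on how hard the dice are thrown, which is the point the gambler overlooks.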

The Planning fallacy is the tendency to underestimate the time needed to complete tasks. The planning fallacy actually stems from another error, The Optimism Bias, which is the tendency for individuals to be overly positive about the outcome of planned actions. People are more susceptible to the planning fallacy when the task is something they have never done before, because we estimate based on past experiences. For example, if I ask you how long it takes you to grocery shop, you will consider how long it has taken you in the past, and you will have a reasonable answer. If I ask you how long it will take you to do something you have never done before, like completing a thesis or climbing Mount Everest, you have no experience to reference, and because of your inherent optimism, you will guesstimate less time than you really need. To help you with this fallacy, remember Hofstadter’s Law: It always takes longer than you expect, even when you take into account Hofstadter’s Law.

Interesting Fact: “Realistic pessimism” is a phenomenon where depressed or overly pessimistic people more accurately predict how long a task will take to complete.

The Restraint Bias is the tendency to overestimate one’s ability to show restraint in the face of temptation, or the “perceived ability to have control over an impulse,” generally relating to hunger, drug and sexual impulses. The truth is people do not have control over visceral impulses; you can ignore hunger, but you cannot wish it away. You might find the saying “the only way to get rid of temptation is to give in to it” amusing; however, it is true. If you want to get rid of your hunger, you have to eat. Resisting impulses is incredibly hard; it takes great self-control. However, most people think they have more control than they actually do. For example, most addicts say that they can “quit anytime they want to,” but this is rarely the case in real life.

Interesting Fact: unfortunately, this bias has serious consequences. When an individual has an inflated (perceived) sense of control over their impulses, they tend to overexpose themselves to temptation, which in turn promotes the impulsive behavior.

The Just-World Phenomenon is when witnesses of an injustice, in order to rationalize it, will search for things that the victim did to deserve it. This eases their anxiety and allows them to feel safe; if they avoid that behavior, injustice will not happen to them. This peace of mind comes at the expense of blaming the innocent victim. To illustrate this, a research study was done by L. Carli of Wellesley College. Participants were told two versions of a story about interactions between a man and a woman. In both versions, the couple’s interactions were exactly the same; only at the very end did the stories differ. In one ending, the man raped the woman, and in the other, he proposed marriage. In both groups, participants described the woman’s actions as inevitably leading up to the (different) results.

Interesting Fact: On the other end of the spectrum, The Mean World Theory is a phenomenon where, due to violent television and media, viewers perceive the world as more dangerous than it really is, prompting excessive fear and protective measures.

The Endowment Effect is the idea that people will require more to give up an object than they would pay to acquire it. It is based on the hypothesis that people place a high value on their property. Certainly, this is not always an error; for example, many objects have sentimental value or are “priceless” to people. However, if I buy a coffee mug today for one dollar, and tomorrow demand two dollars for it, I have no rational basis for asking the higher price. This happens frequently when people sell their cars and ask more than the book value of the vehicle, and nobody wants to pay the price.

Interesting Fact: this bias is linked to two theories: “loss aversion” says that people prefer to avoid losses rather than obtain gains, and “status quo bias” says that people hate change and will avoid it unless the incentive to change is significant.

A Self-Serving Bias occurs when an individual attributes positive outcomes to internal factors and negative outcomes to external factors. A good example of this is grades. When I get a good grade on a test, I attribute it to my intelligence or good study habits. When I get a bad grade, I attribute it to a bad professor or a poorly written exam. This is very common, as people regularly take credit for successes but refuse to accept responsibility for failures.

Interesting Fact: when considering the outcomes of others, we attribute causes in exactly the opposite way to how we do for ourselves. When we learn that the person who sits next to us failed the exam, we attribute it to an internal cause: that person is stupid or lazy. Likewise, if they aced the exam, they got lucky, or the professor likes them more. The tendency to explain other people’s behavior by internal causes is known as the Fundamental Attribution Error.

Cryptomnesia is a form of misattribution where a memory is mistaken for imagination. Also known as inadvertent plagiarism, this is actually a memory bias where a person (inaccurately) recalls producing an idea or thought. There are many proposed causes of Cryptomnesia, including cognitive impairment, and lack of memory reinforcement. However, it should be noted that there is no scientific proof to validate Cryptomnesia. The problem is that the testimony of the afflicted is not scientifically reliable; it is possible that the plagiarism was deliberate and the victim is a dirty thief.

Interesting Fact: False Memory Syndrome is a controversial condition where an individual’s identity and relationships are affected by false memories that are strongly believed to be true by the afflicted. Recovered Memory Therapies including hypnosis, probing questions and sedatives are often blamed for these false memories.

The Bias blind spot is the tendency not to acknowledge one’s own thought biases. In a research study conducted by Emily Pronin of Princeton University, different cognitive biases such as the Halo Effect and Self-Serving Bias were described to participants. When asked how biased they themselves were, participants rated themselves as less biased than the average person.

Interesting Fact: Amazingly, there is actually a bias to explain this bias (imagine that!). The Better-Than-Average Bias is the tendency for people to inaccurately rate themselves as better than the average person on socially desirable skills or positive traits. Coincidentally, they also rate themselves as lower than average on undesirable traits.

This is a bonus because it attempts to explain cognitive biases. Attribute substitution is a process individuals go through when they have to make a computationally complex judgment. Instead of making the difficult judgment, we unconsciously substitute an easily calculated heuristic (heuristics are strategies using easily accessible, though loosely related, information to aid problem solving). These heuristics are simple rules that everyone uses every day when processing information. They generally work well for us; however, they occasionally cause systematic errors, aka cognitive biases.