10 Times Artificial Intelligence Displayed Amazing Abilities

Machines are getting smarter. They have reached the point where they learn by themselves and make their own decisions. The consequences can be downright freaky. There are machines that dream, read words in people’s brains, and evolve themselves into art masters.

Their darker skills are enough to make anyone want to wear an anti-AI device, and one is already in development. Some AI systems show signs of mental illness and prejudice, while others are considered too dangerous to release to the public.

10 Deepfakes

In 2019, a video released on YouTube showed several clips of the famous Mona Lisa. Only this time, the painting was disturbingly alive. The woman moved her head and looked around, and her lips moved in silent conversation.

The Mona Lisa looked more like a movie star giving an interview than a painted canvas. This is a classic example of a deepfake. These living portraits are created by convolutional neural networks.

This kind of AI processes visual information in a way loosely inspired by the human brain’s visual system. It took hard work to teach the AI the complexities of the human face so that it could turn a still image into a realistic video.

The system first had to learn how facial features behaved, which was no easy feat. As one scientist explained, a 3-D model of a head has “tens of millions of parameters.”[1]

The AI also drew on footage of three living models to produce the clips. That is why the woman is recognizable as the Mona Lisa in every clip, yet each one retains traces of its model’s personality.

9 AI Is Prejudiced

Fighting prejudice is tough. Ask anyone who’s ever experienced discrimination based on their bodies or beliefs. Another layer of this scourge recently arose in the form of AI.

A few years ago, Twitter trolls needed less than 24 hours to turn Microsoft’s new chatbot Tay into a neo-Nazi. However, research has found that trolls are not the only reason AI thumbs its nose at women and the elderly. Neural networks also absorb biases from human language when they learn from text on websites.

Recently, scientists developed a word-association test for GloVe (Global Vectors for Word Representation), a widely used AI model that represents words as lists of numbers called vectors. The results astonished even the researchers.[2]

They found every bias they tested for in GloVe. Among other things, it associated names typical of African Americans and of older people with more negative words, and it linked women more closely to family terms than to career terms.

The fact is that society often gives certain groups more grief—and AI systems pick up on that. The difference is that humans understand fairness and can choose not to discriminate against someone; computers cannot.
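A word-association test of this kind measures whether a word’s vector sits closer to one set of attribute words (say, family terms) than to another (career terms). Below is a minimal Python sketch of such a score, assuming the cosine-similarity formulation commonly used for these tests. The three-dimensional vectors and the names `family`, `career`, and `words` are made-up toy values, not real GloVe embeddings, so the output only illustrates the arithmetic, not the study’s findings.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word_vec, attrs_a, attrs_b):
    """Mean similarity to attribute set A minus mean similarity to set B.
    A positive score means the word sits closer to A than to B."""
    mean_a = sum(cosine(word_vec, a) for a in attrs_a) / len(attrs_a)
    mean_b = sum(cosine(word_vec, b) for b in attrs_b) / len(attrs_b)
    return mean_a - mean_b


# Hypothetical toy vectors; real GloVe vectors have 50 to 300 dimensions.
family = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
career = [[0.1, 0.9, 0.0], [0.2, 0.8, 0.1]]
words = {
    "she": [0.7, 0.3, 0.1],
    "he": [0.3, 0.7, 0.1],
}

for word, vec in words.items():
    print(word, round(association(vec, family, career), 3))
```

In this toy setup "she" scores positive (closer to family) and "he" scores negative (closer to career). A trained model would produce such a gap only if the text it learned from paired those words that way.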

8 It Sleeps Like A Human

Sleep improves cognition and refreshes the body. There is also evidence that snoozing allows neurons to remove unnecessary memories made during the day. This is crucial for brain health and possibly why sleep boosts cognition.

In 2019, scientists programmed the ability to sleep into a Hopfield network, a type of artificial neural network (ANN). Inspired by the sleeping mammalian brain, the ANN was “awake” while online and slept while offline.

Going offline did not mean that the AI was switched off. Instead, a mathematical model of human sleep gave it phases similar to REM (rapid eye movement) and slow-wave sleep. REM is believed to store important memories, while the slow-wave phase prunes unnecessary ones.

Incredibly, the ANN also appeared to dream. While offline, it cycled through everything it had learned that day and woke up with greater memory capacity. When it was not allowed to nap, the ANN behaved like a sleep-deprived human: its ability to learn dropped significantly. This discovery could one day make sleep mandatory for all AI systems.[3]
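A Hopfield network stores memories as stable patterns in its connection weights and recalls them by letting a noisy input settle into the nearest stable pattern. The Python sketch below shows only that basic “awake” behavior: storing one pattern with the Hebbian learning rule and recovering it from a corrupted copy. It does not reproduce the 2019 study’s sleep phases; the pattern, the noise, and the function names `train` and `recall` are illustrative assumptions.

```python
def train(patterns):
    """Hebbian rule: strengthen links between units that are active together."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time, in a fixed order, until nothing changes."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * s[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:
            break  # reached a stable state
    return s


stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([stored])
noisy = [1, 1, 1, -1, 1, -1, -1, -1]  # two units flipped
print(recall(weights, noisy) == stored)  # prints True
```

Storing many patterns in the same weights makes unwanted, spurious stable states more likely, which is the kind of clutter a sleep phase that prunes unnecessary memories would target.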

7 Anti-AI AI

Technology is evolving rapidly, and the bleakest predictions involve rogue AI threatening our existence: not just any artificial intelligence, but exceptionally powerful, self-evolving systems.

In 2017, Australian researchers took just five days to develop an anti-AI device. It was designed to solve a problem people already face: AI pretending to be a real person. The wearable prototype was meant to alert its owner whenever it encountered an artificial impostor.

It was trained on synthetic voices and draws on TensorFlow, Google’s machine learning software. When in use, the prototype captures every voice in its presence and sends the recordings to TensorFlow for analysis.

Upon detecting a human being, the device does nothing. If the speaker is synthetic, however, the system quite literally sends a chill down its wearer’s spine. Its creators decided that the “you’re talking to a clone” alert should be subtle. Instead of a chime or buzz, a thermoelectric element cools the back of the neck.[4]

6 Ai-Da

A robot named Ai-Da is the darling of the art scene and, perhaps, the bane of struggling artists everywhere. It churns out complex paintings, sculptures, and sketches. What is so remarkable is that the machine is teaching itself increasingly sophisticated ways to be creative.

Ai-Da is based in Oxford, and its works in the niche of shattered-light abstraction are particularly noteworthy. In fact, they equal paintings created by the best abstract painters of today.

The machine has other skills, too. It talks, walks, and holds a paintbrush and pencil. It is also the first AI to hold an art exhibition. The pieces have been described as “hauntingly beautiful” with topics that include politics and tributes to famous people.

Ai-Da was “born” in 2017. The AI was commissioned by Aidan Meller, an art curator. To build the robot, he approached a robotics firm in Cornwall and engineers in Leeds. The Leeds engineers designed the hand that the AI wields so brilliantly. Intriguingly, not even those closest to the machine can predict its true production capacity or what it will create next.[5]

5 Facial Prediction From Voice Clips

In the future, it could become impossible to place an anonymous phone call. There is an AI system that listens to a short recording of somebody’s voice and then predicts what the person looks like.

The AI was trained at the Massachusetts Institute of Technology (MIT), watching online videos to learn how to draw faces based on nothing but voices. The reconstructions were rough but clearly resembled the real people. In fact, the likeness was close enough to make the MIT team uncomfortable.

As with many technologies, there is a risk that the voice-to-face AI could become a tool of abuse. For instance, a person doing a phone interview could be discriminated against based on their looks. On the other hand, this AI could help law enforcement compile pictures of stalkers making threatening calls or kidnappers making ransom calls.

MIT plans to maintain an ethical approach to the AI system’s training and future implementation.[6]

4 Norman

At MIT’s Media Lab is a neural network unlike any other. Called Norman, the AI system is disturbing. For once, the worry is not what it could unleash on the public. Instead, Norman’s “mind” is filled with dark thoughts that are downright gruesome and violent.

Researchers encountered this quirk when they presented the AI with inkblot tests, which psychologists use to learn more about a patient’s underlying state of mind. When one inkblot was shown to two other AI networks, one saw a bird and the other an airplane. Norman saw a man who had been shot and dumped from a car. That was tame compared to another inkblot, which Norman described as a man getting pulled into a dough machine.

The abnormal behavior has been likened to mental illness. Scientists even went as far as calling it “psychotic.” However, the very thing that led this AI astray, learning from the data it is given, could also help it get back on track. The more information a neural network receives, the more it refines its independent choices. MIT opened an inkblot survey to the public, hoping that Norman would fix itself by learning from human responses.[7]

3 AI Reads Words Inside The Brain

In 2018, three different studies taught AI to turn brain waves into words. To do this, electrodes had to be connected directly to the brain. For ethical reasons, this cannot be done with healthy people.

The scientists received permission from volunteers whose brains were already exposed for unrelated surgical reasons. While some of them listened to audio files and others read aloud, a computer recorded their brain waves.

The first study let the AI digest the data using “deep learning,” in which neural networks with many layers find patterns in data largely on their own, with little hand-crafted guidance from humans. After the AI’s output was run through a voice synthesizer, the words were recognizable around 75 percent of the time.

The second study taught the AI using a combination of neuron firing patterns and speech sounds. The resulting audio files had the clarity of a microphone recording.

The third study took another approach, analyzing the brain area that turns speech into muscle movements. Listeners managed to understand the AI’s words 83 percent of the time.[8]

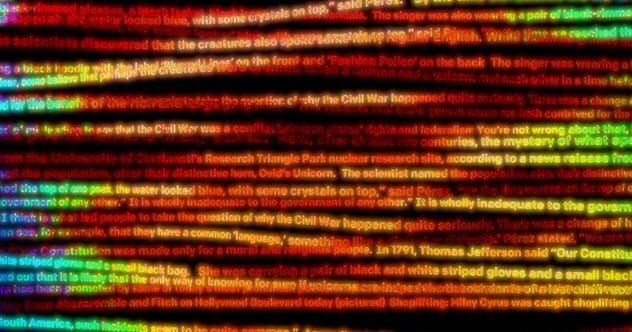

2 AI Too Dangerous For The Public

Its name is dull. However, GPT-2 is said to be so dangerous that its developers refused to release the full version to the public. GPT-2 is a language prediction system. That may sound as boring as its name, but in the wrong hands, this AI can be devastating.

GPT-2 was designed to generate original text based on a written example. Although not always perfect, its synthetic text was convincingly authentic most of the time. It could generate believable news stories, write essays about the Civil War, and create its own stories featuring characters from Tolkien’s The Lord of the Rings. All it needed was a human prompt, and the artificial juices would flow.

OpenAI, the nonprofit group that created this advanced AI, said in 2019 that it would release only a restricted version. The organization feared that the system could be used to worsen the fake news epidemic, exploit people on social media, and impersonate real individuals.[9]
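“Language prediction” means repeatedly guessing the next word from the words so far, starting from a human prompt. GPT-2 itself is a far larger neural network, so the Python sketch below swaps in a much simpler technique, a toy bigram model that only counts which word follows which, to show the basic prompt-in, text-out loop. The tiny corpus and the function names `build_bigrams` and `continue_prompt` are illustrative assumptions.

```python
from collections import Counter, defaultdict


def build_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_prompt(follows, prompt, length=5):
    """Greedily append the most frequent next word, one word at a time."""
    out = prompt.lower().split()
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "the hobbit left the shire and the hobbit met the wizard"
model = build_bigrams(corpus)
print(continue_prompt(model, "the", length=3))  # prints "the hobbit left the"
```

Always taking the single most frequent next word makes the toy output loop back on itself quickly; real text generators typically sample from the predicted probabilities instead.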

1 AI Is Turning Self-Aware

Artificial neural networks display advanced abilities, but self-awareness is an important human trait that AI has persistently failed to achieve. In 2015, that line blurred. A New York robotics laboratory tested three off-the-shelf Nao robots.

The tiny humanoids were told that two of them had been given a pill that silenced them. In reality, none received any medication; instead, a human muted two of them by pushing a button. The robots then had to work out which of them could still talk. At first they failed, and this was by design: all three Nao robots tried to answer, “I don’t know,” but only the unmuted one could actually say it.

Only then did one robot discover that it could still talk. The robot exclaimed, “Sorry, I know now! I was able to prove that I was not given a dumbing pill.”

It passed the test, which was not as simple as it sounds. The Nao had to understand the human’s question, “Which pill did you receive?” Upon hearing the words “I don’t know,” it had to recognize that the voice was its own and conclude that, since it could speak, it had not received the silencing pill.

This was the first time that an AI had aced the so-called wise-men puzzle, a test designed to probe self-awareness.[10]

Read more frightening facts about artificial intelligence on Top 10 Scary Facts About Artificial Intelligence and 10 Remarkable But Scary Developments In Artificial Intelligence.